Mastering Caching: Strategies, Patterns & Pitfalls

This guide covers the most popular caching strategies, cache eviction policies, and pitfalls you might encounter when implementing a cache for your system.

Cache Patterns

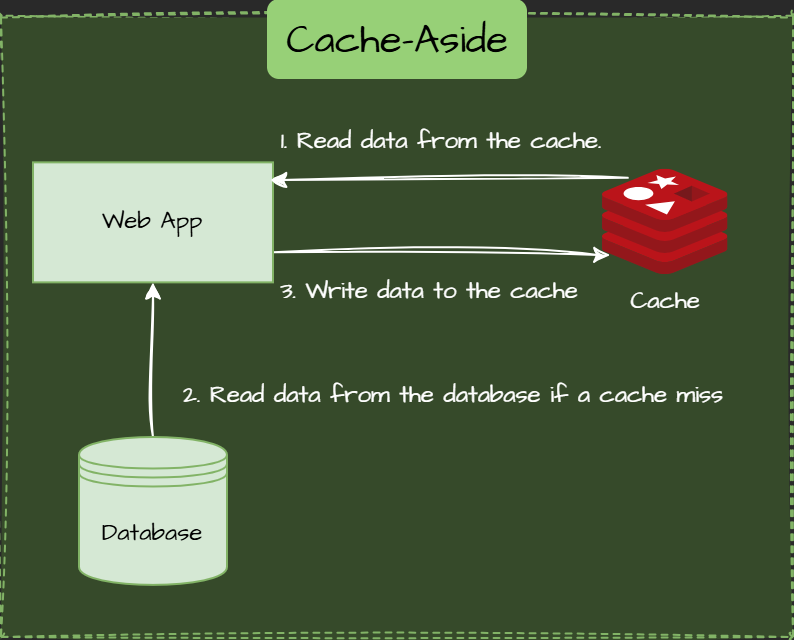

Cache-Aside (Lazy Loading) Pattern

Cache-aside loads data into the cache on demand. If data isn't in the cache (cache miss), the application fetches it from the database, stores it in the cache, and returns it.

The cache-aside flow is as follows:

- Read data from the cache.

- On a cache miss, read the data from the database.

- Write the missed data to the cache.

- Return the data to the UI.

Real-world Example:

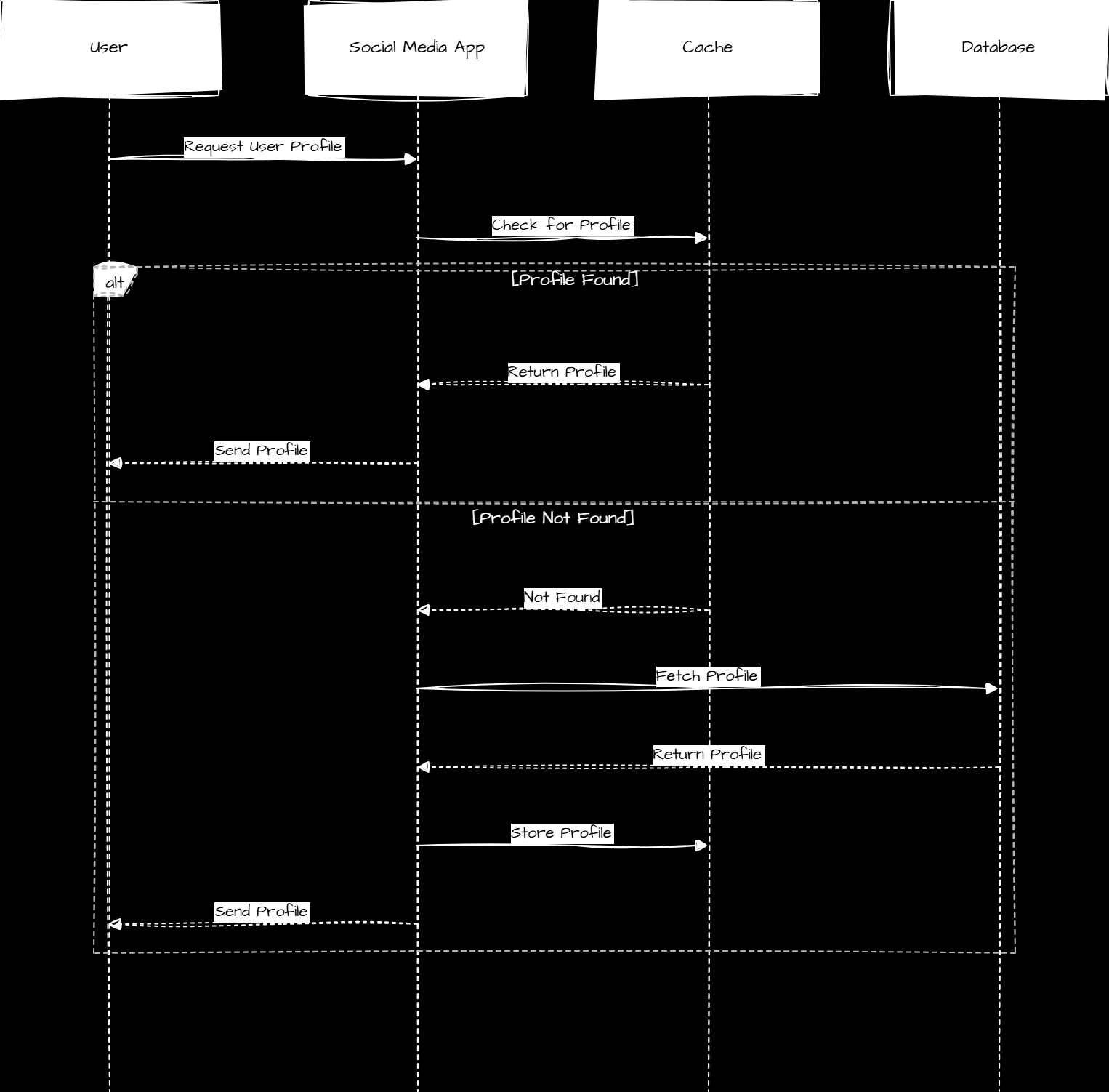

A social media app caches user profiles. When a profile is requested, the app first checks the cache. If it isn't found, the app retrieves it from the database, caches it, and serves subsequent requests quickly.
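
The cache-aside flow can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: two plain dictionaries stand in for the cache and the database, and `load_profile_from_db`, `get_profile`, and `db_reads` are hypothetical names introduced only for this example.

```python
from typing import Optional

# Hypothetical stand-ins: one dict plays the cache, another plays the database.
cache: dict[str, dict] = {}
database: dict[str, dict] = {"u1": {"name": "Ada"}}
db_reads = 0  # counts simulated database round trips, for illustration only


def load_profile_from_db(user_id: str) -> Optional[dict]:
    """Simulated database query."""
    global db_reads
    db_reads += 1
    return database.get(user_id)


def get_profile(user_id: str) -> Optional[dict]:
    profile = cache.get(user_id)              # 1. read from the cache
    if profile is None:                       # 2. cache miss: read from the database
        profile = load_profile_from_db(user_id)
        if profile is not None:
            cache[user_id] = profile          # 3. write the missed data to the cache
    return profile                            # 4. return the data to the caller


first = get_profile("u1")   # miss: goes to the database
second = get_profile("u1")  # hit: served from the cache
```

Note that the application code owns both the cache lookup and the database fallback, which is what distinguishes cache-aside from the patterns that follow.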

Use Cases

- General purpose caching

- You have large data volumes and want to avoid caching data that is rarely accessed.

Cache-Aside Pros:

- Reduces unnecessary memory usage.

- Simple to implement.

Cache-Aside Cons:

- Higher latency on initial requests.

- There is a possibility of stale data.

- Vulnerable to cache stampede if many requests arrive during a cache miss.

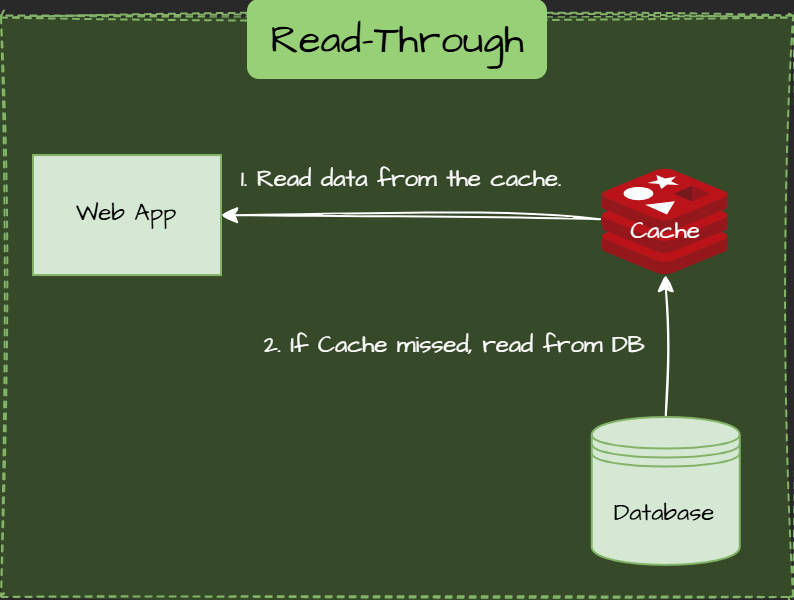

Read-Through Pattern

Similar to Cache-Aside, but the app doesn't interact with the database directly. The cache does: on a miss, the cache itself loads the data from the database.
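
A rough sketch of the idea, assuming the cache is handed a loader function at construction time. `ReadThroughCache`, `load_from_db`, and `loader_calls` are hypothetical names for this example; real caching providers expose their own loader hooks.

```python
from typing import Callable, Optional


class ReadThroughCache:
    """Hypothetical cache that owns the loader, so callers never touch the database."""

    def __init__(self, loader: Callable[[str], Optional[str]]) -> None:
        self._loader = loader
        self._store: dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        if key not in self._store:
            value = self._loader(key)   # the cache, not the app, queries the database
            if value is None:
                return None
            self._store[key] = value
        return self._store[key]


database = {"sku-1": "keyboard"}   # stand-in for a real database
loader_calls: list[str] = []       # records which keys reached the database


def load_from_db(key: str) -> Optional[str]:
    loader_calls.append(key)
    return database.get(key)


products = ReadThroughCache(load_from_db)
a = products.get("sku-1")   # miss: the cache calls the loader
b = products.get("sku-1")   # hit: the loader is not called again
```

The application only ever calls `products.get(...)`, which is why the application logic ends up simpler than with cache-aside.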

Use Cases

- Read-heavy workloads

- A high cache miss rate is acceptable

Read-Through Pros:

- Transparent cache management.

- Simpler application logic.

Read-Through Cons:

- Dependency on caching provider.

- Complexity in debugging cache issues.

- Can still suffer from stale data if the provider doesn’t handle invalidation well.

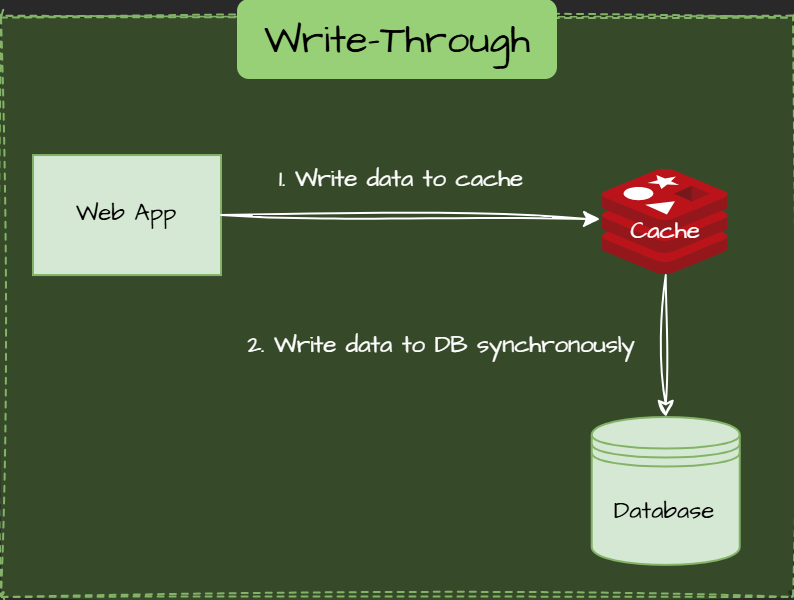

Write-Through Pattern

Data is simultaneously written to the cache and database, ensuring consistency but slightly increasing latency.
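
A minimal write-through sketch, assuming a dictionary as the database. In this single-threaded example "simultaneously" means both stores are updated inside one `put` call before it returns. Writing to the database first is an assumption of this sketch, and `WriteThroughCache` is a hypothetical name.

```python
class WriteThroughCache:
    """Hypothetical cache that updates the database and the cache in the same call."""

    def __init__(self, database: dict) -> None:
        self._db = database
        self._store: dict = {}

    def put(self, key, value) -> None:
        self._db[key] = value      # synchronous write to the backing store
        self._store[key] = value   # then the cache; the caller waits for both

    def get(self, key):
        return self._store.get(key)


db: dict = {}
settings = WriteThroughCache(db)
settings.put("theme", "dark")
```

Because the caller waits for both writes, every `put` pays the database latency, which is the trade-off listed in the cons below.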

Use Cases

- A low number of writes expected

- Data consistency is critical, and additional latency is acceptable.

Note: Not suitable for high-performance applications where even minimal latency significantly impacts user experience or system throughput.

Write-Through Pros:

- Strong consistency.

- Simple recovery from cache failures.

Write-Through Cons:

- Increased write latency.

- Potential database overload with frequent writes.

- Cache pollution with infrequently accessed writes.

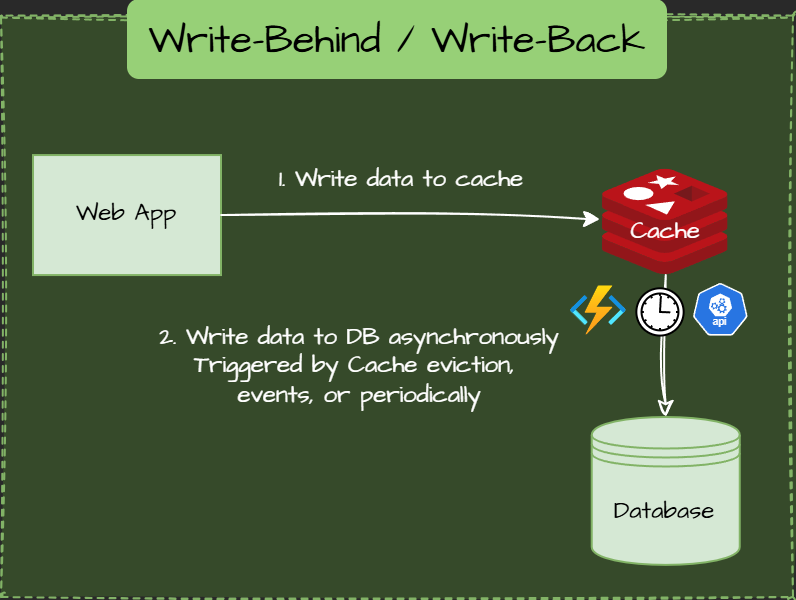

Write-Behind / Write-Back Pattern

Data is written to the cache first and persisted to the database asynchronously.

The asynchronous write is triggered periodically, by cache eviction, or by other events.
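
The sketch below illustrates the idea under a simplifying assumption: instead of a background worker or timer, dirty keys are flushed to the database once a hypothetical `flush_threshold` is reached. `WriteBehindCache` and its methods are names invented for this example.

```python
class WriteBehindCache:
    """Hypothetical cache that buffers writes and persists them in batches."""

    def __init__(self, database: dict, flush_threshold: int = 3) -> None:
        self._db = database
        self._store: dict = {}
        self._dirty: set = set()               # keys written but not yet persisted
        self._flush_threshold = flush_threshold

    def put(self, key, value) -> None:
        self._store[key] = value               # fast path: only the cache is touched
        self._dirty.add(key)
        if len(self._dirty) >= self._flush_threshold:
            self.flush()                       # stand-in for a timer or background worker

    def get(self, key):
        return self._store.get(key)

    def flush(self) -> None:
        for key in self._dirty:
            self._db[key] = self._store[key]
        self._dirty.clear()


db: dict = {}
counters = WriteBehindCache(db, flush_threshold=2)
counters.put("a", 1)
pending = dict(db)        # snapshot: nothing has reached the database yet
counters.put("b", 2)      # second dirty key triggers the flush
```

Anything still in `_dirty` when the process dies is lost, which is the data-loss risk listed in the cons below.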

Use Cases

- High write throughput with acceptable delays in database consistency.

Write-Behind Pros:

- Optimized write performance.

- Reduced database load.

Write-Behind Cons:

- There is a risk of data loss if the cache fails.

- Complexity in handling eventual consistency.

- Inconsistent state between cache and DB during system failures.

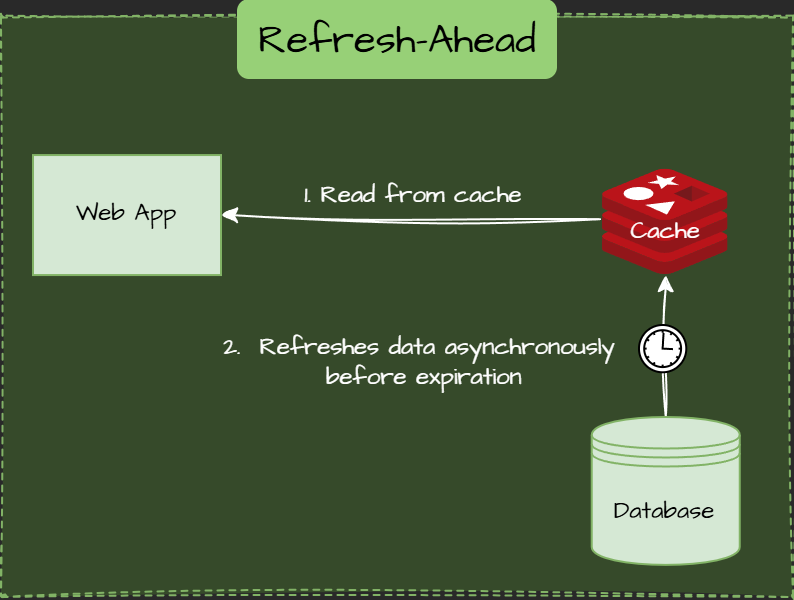

Refresh-Ahead Pattern

Cache proactively refreshes data asynchronously before expiration.
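
One way to picture this, as a sketch rather than a reference implementation: entries carry an expiry time, and a read that lands within a `refresh_window` of that expiry triggers a reload. For simplicity the reload here runs inline, where a real system would hand it to a background task. `RefreshAheadCache`, `load_price`, and the injected `clock` are hypothetical.

```python
from typing import Any, Callable


class RefreshAheadCache:
    def __init__(self, loader: Callable[[str], Any], ttl: float,
                 refresh_window: float, clock: Callable[[], float]) -> None:
        self._loader = loader
        self._ttl = ttl
        self._refresh_window = refresh_window
        self._clock = clock
        self._store: dict[str, tuple[Any, float]] = {}   # key -> (value, expires_at)

    def _load(self, key: str, now: float) -> Any:
        value = self._loader(key)
        self._store[key] = (value, now + self._ttl)
        return value

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._store.get(key)
        if entry is None or now >= entry[1]:
            return self._load(key, now)      # missing or expired: load on demand
        value, expires_at = entry
        if expires_at - now <= self._refresh_window:
            self._load(key, now)             # close to expiry: refresh ahead of time
        return value                         # the caller still gets the cached value


loads: list[str] = []


def load_price(key: str) -> str:
    loads.append(key)
    return f"price-v{len(loads)}"


fake_now = [0.0]  # controllable clock so the example needs no sleeping
prices = RefreshAheadCache(load_price, ttl=10.0, refresh_window=3.0,
                           clock=lambda: fake_now[0])
first = prices.get("sku-1")    # t=0: miss, loads the first version
fake_now[0] = 8.0
second = prices.get("sku-1")   # t=8: served from cache, refresh triggered
fake_now[0] = 9.0
third = prices.get("sku-1")    # t=9: already sees the refreshed value
```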

Use Cases

- Minimizing latency for critical cached data while maintaining data freshness.

Real-world Example:

An e-commerce site proactively refreshes product pricing and inventory details to ensure customers see up-to-date data immediately.

Refresh-Ahead Pros:

- Reduced latency for critical data.

- Maintains data freshness consistently.

Refresh-Ahead Cons:

- Increased implementation complexity.

- Higher resource utilization.

- Inefficient if data refreshes but isn’t reused.

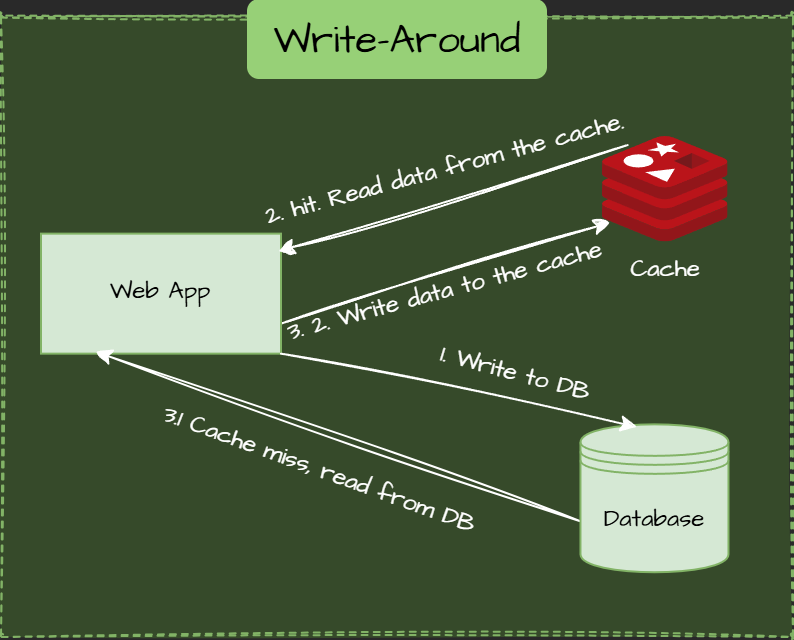

Write-Around Pattern

Writes go only to the database. The cache is updated only on a subsequent read (if at all).
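
A minimal sketch with dictionaries standing in for the database and the cache. Dropping any existing cached copy on write is an assumption added here so that reads do not return an outdated value; `WriteAroundStore` is a hypothetical name.

```python
class WriteAroundStore:
    def __init__(self) -> None:
        self.db: dict = {}
        self.cache: dict = {}

    def write(self, key, value) -> None:
        self.db[key] = value          # the write bypasses the cache
        self.cache.pop(key, None)     # assumption: invalidate any stale cached copy

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        value = self.db.get(key)      # first read after a write is always a miss
        if value is not None:
            self.cache[key] = value
        return value


store = WriteAroundStore()
store.write("report-1", "draft")
cached_after_write = "report-1" in store.cache   # False: nothing cached yet
value = store.read("report-1")                   # miss, then cached
```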

Use Cases

- No data update expected

- A low number of reads expected

Write-Around Pros:

- Avoids polluting the cache with data that might never be read.

- Reduced risk of data loss

Write-Around Cons:

- Higher read latency on first access.

- Risk of a cache miss immediately after a write.

Cache Eviction Strategies

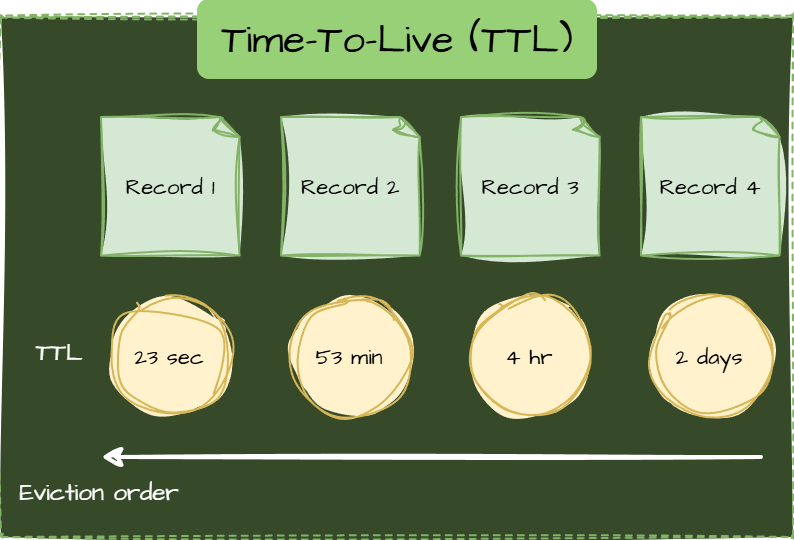

Time-To-Live (TTL)

Each cache entry has a fixed lifespan and is evicted once it expires.
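
A small sketch of TTL expiry, assuming entries are checked lazily when they are read instead of being swept by a background job. `TTLCache` and the injectable `clock` parameter are hypothetical; the clock is injected only so the example can simulate time passing.

```python
import time
from typing import Any, Callable


class TTLCache:
    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: dict[str, tuple[Any, float]] = {}   # key -> (value, expires_at)

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (value, self._clock() + self._ttl)

    def get(self, key: str) -> Any:
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]      # lazily evict the expired entry on read
            return None
        return value


fake_now = [0.0]
sessions = TTLCache(ttl_seconds=30.0, clock=lambda: fake_now[0])
sessions.set("session-1", "alice")
before = sessions.get("session-1")   # still within the 30-second lifespan
fake_now[0] = 31.0
after = sessions.get("session-1")    # expired: evicted and reported as missing
```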

Use Cases

- Session Management

- TTL ensures data freshness by automatically removing outdated responses.

- DNS records use TTL to update host-to-IP mappings periodically.

TTL Pros:

- Predictable, easy to manage

TTL Cons:

- Frequently accessed data may expire while it is still in demand.

- Unused data stays in the cache until TTL expires, even if it's never accessed.

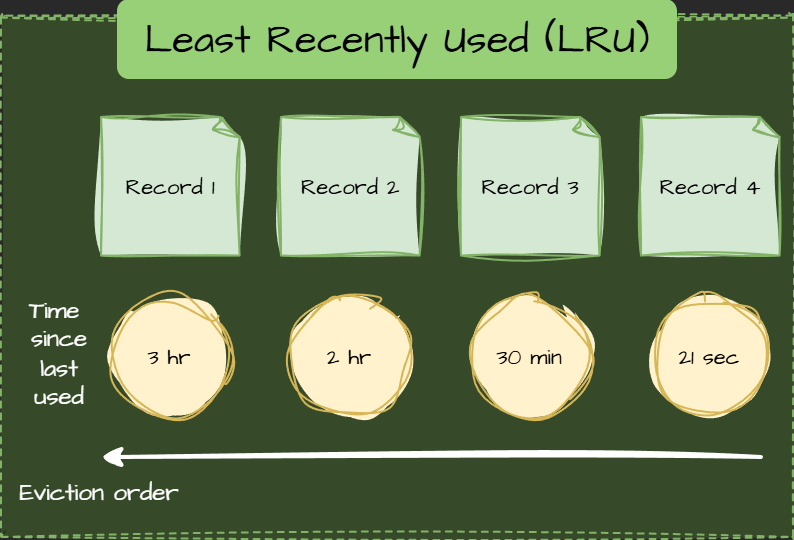

Least Recently Used (LRU)

LRU assumes that recently accessed data is more likely to be accessed again and removes items that haven't been accessed for the longest time.
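
A compact way to sketch LRU in Python is with `collections.OrderedDict`, which keeps keys in order and can move a key to the end on access. `LRUCache` is a hypothetical class for illustration, with capacity counted in entries.

```python
from collections import OrderedDict


class LRUCache:
    def __init__(self, capacity: int) -> None:
        self._capacity = capacity
        self._items: OrderedDict = OrderedDict()   # oldest access first, newest last

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)               # mark as most recently used
        return self._items[key]

    def put(self, key, value) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)        # evict the least recently used


pages = LRUCache(capacity=2)
pages.put("a", 1)
pages.put("b", 2)
pages.get("a")        # "a" becomes the most recently used entry
pages.put("c", 3)     # over capacity: "b" is the least recently used and is evicted
```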

Use Cases

- Recent access patterns are a strong indicator of future use.

LRU Pros:

- Keeps hot data in cache

LRU Cons:

- Tracking access order requires overhead

- Items accessed at regular intervals may get evicted.

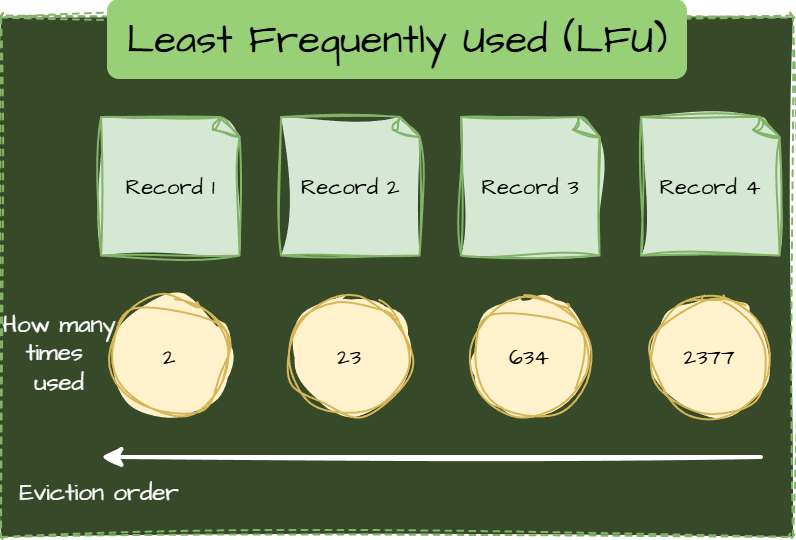

Least Frequently Used (LFU)

LFU prioritizes popular items and evicts the items that are accessed least often.
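
The sketch below keeps a per-key access count and evicts the key with the lowest count. It is deliberately naive: finding the victim is a linear scan, whereas production LFU implementations use more elaborate structures. `LFUCache` is a hypothetical name, and a capacity of at least 1 is assumed.

```python
from collections import Counter


class LFUCache:
    def __init__(self, capacity: int) -> None:
        self._capacity = capacity      # assumed to be >= 1
        self._values: dict = {}
        self._hits: Counter = Counter()

    def get(self, key):
        if key not in self._values:
            return None
        self._hits[key] += 1           # every read raises the key's frequency
        return self._values[key]

    def put(self, key, value) -> None:
        if key not in self._values and len(self._values) >= self._capacity:
            # Linear scan for the least frequently used key (ties: oldest insert).
            victim = min(self._values, key=lambda k: self._hits[k])
            del self._values[victim]
            del self._hits[victim]
        self._values[key] = value
        self._hits[key] += 1


listings = LFUCache(capacity=2)
listings.put("popular", 1)
listings.put("niche", 2)
listings.get("popular")
listings.get("popular")
listings.put("new", 3)     # "niche" has the lowest count and is evicted
```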

Use Cases

- Frequently accessed content remains cached.

- E-commerce Product Listings: Popular products are cached for quick retrieval.

- Machine Learning Caches: Keeps frequently used datasets in memory.

LFU Pros:

- Prioritizes frequent access

LFU Cons:

- Tracking usage counts adds complexity

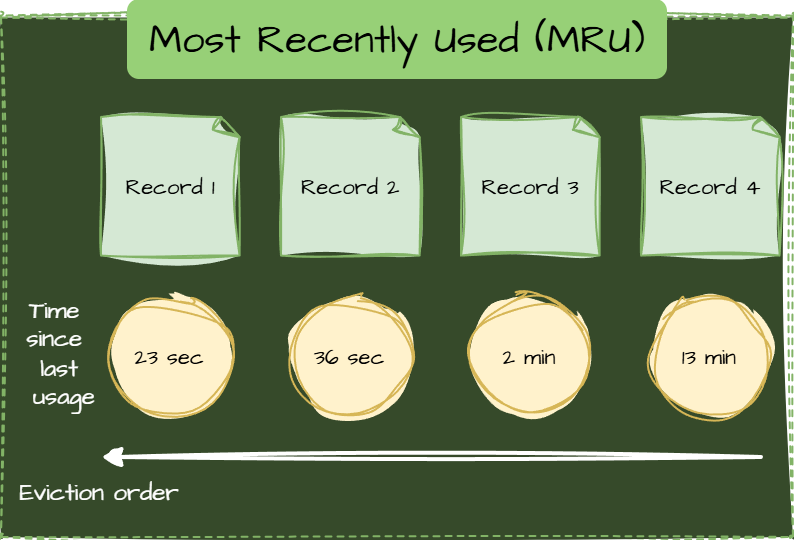

Most Recently Used (MRU)

MRU removes the most recently accessed item, on the assumption that an item that was just accessed is unlikely to be needed again soon.
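
As a sketch, MRU can reuse the same `OrderedDict` bookkeeping as an LRU cache and simply evict from the opposite end. `MRUCache` is a hypothetical class for illustration.

```python
from collections import OrderedDict


class MRUCache:
    def __init__(self, capacity: int) -> None:
        self._capacity = capacity
        self._items: OrderedDict = OrderedDict()   # most recently used entry is last

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)               # record the access
        return self._items[key]

    def put(self, key, value) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        elif len(self._items) >= self._capacity:
            self._items.popitem(last=True)         # evict the most recently used entry
        self._items[key] = value


tracks = MRUCache(capacity=2)
tracks.put("a", 1)
tracks.put("b", 2)
tracks.get("a")       # "a" is now the most recently used entry
tracks.put("c", 3)    # cache is full: "a" is evicted, "b" survives
```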

Use cases

- Streaming Services: The last-played media might not be needed again soon.

- Batch Processing Systems

- New data is unlikely to be accessed again soon.

MRU Pros:

- Useful for specific workflows

- Efficient when older data is more valuable than recently accessed data.

MRU Cons:

- Often the opposite of typical cache patterns

- Less effective for general caching scenarios compared to LRU.

Segmented LRU (SLRU)

SLRU divides the cache into two segments: probationary and protected. New items start in the probationary segment, and repeated access promotes them to the protected segment.
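
A rough sketch of the two-segment idea follows. It assumes that a single repeat access is enough for promotion and that entries pushed out of the protected segment are demoted back to probation instead of being dropped. Both are common choices, but they are assumptions of this example, and `SLRUCache` is a hypothetical name.

```python
from collections import OrderedDict


class SLRUCache:
    def __init__(self, probation_size: int, protected_size: int) -> None:
        self._probation_size = probation_size
        self._protected_size = protected_size
        self._probation: OrderedDict = OrderedDict()
        self._protected: OrderedDict = OrderedDict()

    def _insert_probation(self, key, value) -> None:
        self._probation[key] = value
        if len(self._probation) > self._probation_size:
            self._probation.popitem(last=False)     # evict the oldest probationary entry

    def get(self, key):
        if key in self._protected:
            self._protected.move_to_end(key)
            return self._protected[key]
        if key in self._probation:
            value = self._probation.pop(key)
            self._protected[key] = value            # promote on a repeat access
            if len(self._protected) > self._protected_size:
                old_key, old_value = self._protected.popitem(last=False)
                self._insert_probation(old_key, old_value)   # demote, do not drop
            return value
        return None

    def put(self, key, value) -> None:
        if key in self._protected:
            self._protected[key] = value
            self._protected.move_to_end(key)
            return
        self._probation.pop(key, None)
        self._insert_probation(key, value)          # new items start on probation


cache = SLRUCache(probation_size=2, protected_size=2)
cache.put("a", 1)
cache.get("a")        # second touch: "a" moves to the protected segment
cache.put("b", 2)
cache.put("c", 3)
cache.put("d", 4)     # probation overflows: "b" is evicted, "a" is untouched
```

A burst of one-off keys can only churn the probationary segment, which is how SLRU protects entries that have already proven useful.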

Use cases

- You want a balanced LRU for mixed access patterns.

- Adapts better than pure LRU or LFU.

- Memcached uses a segmented LRU design for efficient cache management.

SLRU Pros:

- Hybrid approach adapts to various scenarios

- Minimizes frequent eviction of valuable data.

SLRU Cons:

- Requires careful tuning and extra memory

In-Memory Caching

In-memory caching stores data in the application memory for extremely fast retrieval.
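
In Python, the standard library's `functools.lru_cache` decorator is one readily available in-process cache. The sketch below uses it on a hypothetical `slow_square` function; the `calls` counter exists only to show that the second call never runs the function body.

```python
from functools import lru_cache

calls = 0  # counts how often the function body actually runs


@lru_cache(maxsize=128)
def slow_square(n: int) -> int:
    """Stand-in for an expensive computation or remote lookup."""
    global calls
    calls += 1
    return n * n


slow_square(12)                    # computed and stored in process memory
slow_square(12)                    # answered from the in-memory cache
info = slow_square.cache_info()    # hit and miss statistics kept by lru_cache
```

The cached results live inside the process, so they disappear on restart, which matches the cons listed below.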

Use cases

- Frequent and fast data access is critical.

- The volume of cached data is manageable.

Pros:

- It has an extremely fast access speed.

- Ideal for real-time applications.

Cons:

- Limited by memory capacity.

- Data loss on app restarts or crashes.

Browser Caching

Browser caching stores web assets locally in a user's browser to improve page load times.
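
Browsers decide what to store largely from the `Cache-Control` response header. The helper below is a hedged sketch of how a server might pick that header. It assumes static assets have fingerprinted file names (so a changed file gets a new URL) and that everything else should be revalidated. `cache_headers` and the suffix list are hypothetical.

```python
def cache_headers(path: str) -> dict[str, str]:
    """Pick a Cache-Control header for a response, based on the requested path."""
    static_suffixes = (".js", ".css", ".png", ".jpg", ".svg", ".woff2")
    if path.endswith(static_suffixes):
        # Assumption: the file name contains a content hash, so a one-year
        # lifetime is safe because a changed file gets a new URL.
        return {"Cache-Control": "public, max-age=31536000, immutable"}
    # HTML and other dynamic responses: the browser may store them
    # but must revalidate with the server before reuse.
    return {"Cache-Control": "no-cache"}


asset_headers = cache_headers("/static/app.3f9a1c.js")
page_headers = cache_headers("/index.html")
```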

Use cases

- Serving static assets like images, scripts, and style sheets.

- Enhancing user experience by reducing load times.

Pros:

- Faster page loads.

- Reduces server load and bandwidth usage.

Cons:

- It can serve stale content if it is not appropriately managed.

- Complexity in cache invalidation strategies.

Common Pitfalls to Avoid

- Stale Data: Without proper TTLs or refresh-ahead logic, your cache may serve outdated information. Always define expiration policies and consider cache busting for dynamic content.

- Cache Stampede: Many clients may simultaneously hit the database when a popular item expires. Avoid this by implementing locking (e.g., mutex) or preemptive refresh techniques.

- Over-Caching: Caching too aggressively can waste memory and introduce complexity. Focus on high-read, expensive-to-compute data.

- Memory Leaks: You can exhaust available memory if you forget to evict unused data or rely on unbounded in-memory caches. Always use an eviction policy like LRU or TTL.

- Inconsistent State: The cache and database can become out of sync, especially in write-behind strategies. Ensure write operations are durable or fallback logic is in place.

- Cache Miss Penalty: If a cache miss triggers slow or costly operations, the user experience may suffer. Optimize fallback paths and consider warming up the cache.

- Poor Invalidation Logic: Incorrect or missing invalidation leads to stale or incorrect data. Use proper keys and define clear invalidation rules.

- Cold Start Delays: A cold cache may significantly degrade performance after a deployment or restart. Consider pre-warming the cache with essential data.
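
The locking approach to cache stampede mentioned in the list above could look roughly like this. It is a sketch for a single process using threads: each key gets its own lock, and a thread re-checks the cache after acquiring the lock so that only the first one runs the expensive query. `expensive_query`, `get`, and `db_calls` are hypothetical names; a distributed system would need a shared lock instead.

```python
import threading
from collections import defaultdict

cache: dict[str, str] = {}
key_locks: defaultdict = defaultdict(threading.Lock)   # one lock per cache key
locks_guard = threading.Lock()                         # protects key_locks itself
db_calls = 0


def expensive_query(key: str) -> str:
    """Stand-in for a slow database query; only called while holding the key's lock."""
    global db_calls
    db_calls += 1
    return f"value-for-{key}"


def get(key: str) -> str:
    value = cache.get(key)
    if value is not None:
        return value                       # fast path: no locking on a hit
    with locks_guard:
        lock = key_locks[key]
    with lock:
        value = cache.get(key)             # re-check: another thread may have filled it
        if value is None:
            value = expensive_query(key)
            cache[key] = value
    return value


# Eight concurrent requests for the same missing key.
threads = [threading.Thread(target=get, args=("hot",)) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

However the threads interleave, the re-check under the lock means the query runs once for the key.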

Additional Tips:

- Monitor cache hit/miss rates to identify optimization opportunities.

- Consider hybrid caching approaches tailored to specific scenarios.

- Evaluate trade-offs between complexity and performance carefully.
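
For the first tip, a hit ratio can be tracked with two counters around the lookup. The wrapper below is a minimal sketch; `InstrumentedCache` and `hit_ratio` are hypothetical names, and a real deployment would more likely export these counters to a metrics system.

```python
class InstrumentedCache:
    def __init__(self) -> None:
        self._store: dict = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        return None

    def put(self, key, value) -> None:
        self._store[key] = value

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


stats_cache = InstrumentedCache()
stats_cache.get("x")          # miss
stats_cache.put("x", 1)
stats_cache.get("x")          # hit
stats_cache.get("x")          # hit
stats_cache.get("y")          # miss
ratio = stats_cache.hit_ratio()
```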